|

Entries tagged debian-administration

29 December 2006 21:50

Only three things to say today:

- Second anniversary

Today marks the second anniversary of the Debian Administration website.

(I think that this is the first public mention of my intention to set up the site. It was the earliest I could find anyway.)

- Edinburgh Trivia

Doing my own small bit for Debconf I went for a walk on Christmas Day.

For two years I've lived in the area of Edinburgh known as Leith. (Famous for being a port, and being a port of call for hookers)

During that time I've taken buses, and walked, up and down Leith Walk hundreds of times. Almost every time I do I notice a large chimney by The Edinburgh Playhouse Theatre. The top 20/30ft of the chimney can be seen peeking over the tops of local buildings, assuming your view isn't blocked by other buildings. And now?

Now I know what it sits upon.

My little adventure in getting to know the city I've been living in; I wanted to find the bottom of the chimney and see what it was attached to. So if anybody coming to Debconf next year gets massively lost and curious about a large chimney en route to my house .. I can tell them all about it.

It is the little things in life ..

- Xen Shell

I'm not sure that there are many people using this; the freshmeat listing has a few subscribers but I'm never sure how well that translates. (Plus of course nobody rated the software, so it might be that they hate it?) Anyway there are almost certainly far fewer users of the shell than of the main xen-tools package, but as of last night the shell allows you to control more than one Xen instance.

This is quite a large change, since previously it explicitly only supported one user controlling one Xen instance. Expect a new release shortly once I've tested it more, and updated the documentation.

Tags: debian-administration, edinburgh, xen-shell

|

22 May 2007 21:50

I was surprised to see Michal Čihař suggesting that memcached wasn't helping his site enough.

I've been a huge fan of Danga's Memcached for the past year or two. I'm using it very heavily upon the Debian Administration website, and also upon my new dating site.

There are, of course, issues with making sure you flush the cache when things are updated, but I've got a good pair of test suites for that now :)

With a nice singleton accessor I can use the code as easily as:

    sub recent_users
    {
        my $cache   = Singleton::Memcache->instance();
        my $results = $cache->get( "recent_users" );
        return( $results ) if ( $results );

        # fetch from database

        $cache->set( "recent_users", $results );
        return( $results );
    }

Obvious once you've done it a few times, but right now I can feel the difference if I disable the cache upon either of the sites. With it enabled, page load time just drops.

I guess the effectiveness depends upon the site you're using, and how often things can be usefully cached. I know that for my uses I tend to have large items which would be expensive to fetch which occur on every page (such as "currently online users", or "recent weblogs".) That probably means I benefit more than others, still I've become so enamoured of the project I feel the need to pimp it a little!

Tags: debian-administration, local-people, memcached

|

26 October 2007 21:50

I made a new release of the Chronicle blog compiler the other day, which seems to be getting a surprising number of downloads from my apt repository.

The apt repository will be updated shortly to drop support for Sarge, since in practice I've not uploaded new things there for a while.

In other news I made some new code for the Debian Administration website! The site now has the notion of a "read-only" state. This state forbids new articles from being posted, new votes being cast, and new comments being posted.

The read-only state is mostly designed for emergencies, and for admin work upon the host system (such as when I'm tweaking the newly installed search engine).
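A minimal sketch of how such a read-only switch might be wired in, written in Python rather than the site's Perl. The class and the action names (`post_article`, `cast_vote`, `post_comment`) are made up for illustration and are not taken from the real codebase.

```python
class ReadOnlyError(Exception):
    """Raised when a write is attempted while the site is read-only."""


class Site:
    # Actions that modify state; these names are hypothetical.
    WRITE_ACTIONS = {"post_article", "cast_vote", "post_comment"}

    def __init__(self):
        self.read_only = False

    def handle(self, action):
        """Refuse state-changing actions while the read-only flag is set."""
        if self.read_only and action in self.WRITE_ACTIONS:
            raise ReadOnlyError(f"{action} is disabled: site is read-only")
        return f"{action}: ok"
```

Keeping the check in one central place means reads carry on working while every write path is refused in the same way.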

In more coding news I've been updating the xen-shell a little recently, so it will shortly have the ability to checksum the filesystem of Xen guests - and later validate them. This isn't a great security feature because it assumes you trust dom0 and, more importantly, your guest must be shut down before its files can be checksummed.

However as a small feature I believe the suggestion was an interesting one.
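The checksum-then-validate idea can be sketched as follows. This is an illustration in Python, not the xen-shell implementation: it assumes the guest's filesystem is already mounted somewhere on dom0, and the function names are my own.

```python
import hashlib
import os


def checksum_tree(root):
    """Return {relative_path: sha256_hex} for every regular file below root."""
    sums = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path) or not os.path.isfile(path):
                continue  # skip symlinks, sockets, devices
            digest = hashlib.sha256()
            with open(path, "rb") as handle:
                for chunk in iter(lambda: handle.read(65536), b""):
                    digest.update(chunk)
            sums[os.path.relpath(path, root)] = digest.hexdigest()
    return sums


def validate_tree(root, expected):
    """Compare the tree against an earlier manifest; return changed or new paths."""
    current = checksum_tree(root)
    changed = {path for path in expected if current.get(path) != expected[path]}
    added = set(current) - set(expected)
    return sorted(changed | added)
```

The manifest from `checksum_tree()` has to be stored somewhere the guest cannot modify, which is the "you must trust dom0" caveat in another form.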

Finally I've been thinking about system exploitation via temporary file abuse. There are a couple of cases that are common:

- Creation of an arbitrary (writable) file upon a host.

- Creation of an arbitrary (non-writable) file upon a host.

- Truncation of an existing file upon a host.

Exploiting the first to go from user to root access is trivial. But how would you exploit the last two?

Denial Of Service attacks are trivial via the creation/truncation of /etc/nologin, /etc/shadow, (or even /boot/grub/menu.lst!) But gaining privileges? I can't quite see how.
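For anyone who hasn't seen the truncation case in action, here is a small, self-contained illustration in Python. It only demonstrates the mechanism (a symlink planted at a predictable temporary name) inside a scratch directory; it says nothing about privilege escalation. It assumes a POSIX system, and `naive_write` / `careful_write` are names invented for the example.

```python
import os
import tempfile


def naive_write(path, data):
    """Insecure: follows symlinks and truncates whatever the name points at."""
    with open(path, "w") as handle:
        handle.write(data)


def careful_write(path, data):
    """Refuse to reuse an existing name; O_EXCL also rejects a planted symlink."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(data)


def demo():
    """Return (victim contents after the naive write, whether the careful write refused)."""
    with tempfile.TemporaryDirectory() as workdir:
        victim = os.path.join(workdir, "victim.conf")
        with open(victim, "w") as handle:
            handle.write("important settings\n")

        # The attacker plants a symlink at the predictable temporary name.
        predictable = os.path.join(workdir, "app.tmp")
        os.symlink(victim, predictable)

        try:
            careful_write(predictable, "scratch")
            refused = False
        except FileExistsError:
            refused = True

        naive_write(predictable, "")  # truncates the victim via the link
        with open(victim) as handle:
            return handle.read(), refused
```

In real code `tempfile.mkstemp()` gives the same protection with an unpredictable name as well.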

Comments welcome!

Tags: chronicle, debian-administration, security, xen-shell

|

21 May 2008 21:50

Recently I have mostly been "behind". I've caught up a little on what I wanted to do though over the past couple of days, so I won't feel too bad.

I've:

- made a new release of the chronicle blog compiler, after receiving more great feedback from MJ Ray.

- un-stalled the Planet Debian.

- updated the weblogs hosted by Debian Administration, after help and suggestions from Daniel Kahn Gillmor.

- stripped, cleaned, and tested a new steam engine. Nearly dying in the process.

- discovered a beautiful XSS attack against a popular social networking site, then exploited that en masse to collect hundreds of username/password pairs - all because the site admins said "Prove it" when I reported the hole. Decisions decisions .. what to do with the list...

- released a couple of woefully late DSAs.

- started learning British Sign Language.

Anyway I've been bad and not writing much recently on the Debian Administration site, partly because I'm just sick of the trolling comments that have been building up, and partly due to general lack of time. I know I should ignore them, and I guess by mentioning them here I've kinda already lost, but I find it hard to care when random folk are being snipy.

Still I've remembered that some people are just great to hear from. I know if I see mail from XX they will offer an incisive, valid, criticism or a fully tested and working patch. Sometimes both at the same time.

In conclusion I need my pending holiday in the worst way; and I must find time to write another letter...

ObQuote: Dungeons & Dragons

Tags: chronicle, debian-administration, done, steam, xss

|

18 July 2008 21:50

Over the past few nights I've managed to successfully migrate the Debian Administration website to the jQuery javascript library.

This means that my own javascript library code has been removed, replaced, and improved!

The site itself doesn't use very much javascript - there are a couple of places where focus is set to a couple of elements, but other than that we're only talking about:

Still there are a couple of enhancements that I've got planned which will make the site neater and more featureful for those users who've chosen to enable javascript in their browsers.

Here's my list of previous javascript usage - out of date now that I've basically chosen to use jQuery for everything.

ObQuote: Short Circuit.

Tags: debian-administration, javascript, jquery

|

30 March 2009 21:50

All being well the Debian Administration website now fully supports UTF-8.

This change was a long time coming, considering the amount of time the site has been live.

Most of the changes have been present for a while:

- Correctly setting the database to store UTF-8 internally, rather than latin1.

- Correctly setting the charset of the generated pages.

The only missing part was ensuring that the text input by visitors/users was correctly decoded and treated as UTF-8. This was handled by updating the Perl CGI module to explicitly call charset appropriately.

Since the code behind the site masks the database, memcached, and CGI handles behind singletons the change itself was pretty trivial:

I made more changes this evening to tie it all together, and to ensure that my Database connection is always forced to use UTF but I think that wasn't so important.
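To show why the input-decoding step matters, here is a tiny Python illustration (not the site's Perl) of the same submitted bytes decoded as UTF-8 and, wrongly, as latin1. The function name is hypothetical.

```python
def decode_form_value(raw: bytes) -> str:
    """Decode submitted bytes as UTF-8, replacing anything malformed."""
    return raw.decode("utf-8", errors="replace")


# Bytes as a browser would send them for a UTF-8 page.
submitted = "Čihař".encode("utf-8")

correct = decode_form_value(submitted)
mojibake = submitted.decode("latin-1")  # what treating the input as latin1 yields
```

The five-character name arrives as seven bytes; decoded as latin1 it becomes seven junk characters, and that junk is what ends up stored if the input layer is the one piece left unconverted.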

I hope this is vaguely useful the next time I have to fight with character sets & encodings. It is just all so nasty. Failing that these pages are vaguely useful:

ObFilm: Run Lola Run

Tags: debian-administration, utf, utf-8, utf8

|

25 October 2009 21:50

Over the past couple of months the machine which hosts the Debian Administration website has been struggling with two distinct problems:

- The dreaded scheduler bug/issue

The machine would frequently hang with messages of the form:

Task xxx blocked for more than 120 seconds

This would usually require the application of raised elephants to recover from.

- OOM-issues

The system would exhaust the generous 2Gb of memory it possessed, and start killing random tasks until the memory usage fell - at which point the server itself stopped functioning in a useful manner.

Hopefully these problems are now over:

The combination of these two changes should resolve the memory issues, and I've installed a home-made 2.6.31.4 kernel which appears to have corrected the task-blocking scheduler issue.

ObTitle: Bridget Jones: The Edge of Reason

Tags: apache2, bytemark, debian-administration, nginx, performance

|

31 December 2011 21:50

I've been informed by a couple of people that the Debian Administration site is down. Sadly it is; at the moment the host isn't showing anything on the serial console and remotely power-cycling it isn't showing any signs of life.

At this time of year I don't want to drag anybody in to take care of it, so ETA on recovery/replacement hardware is Monday/Tuesday.

In other news I've made it to year five of the KVM hosting sub-project/thing. Originally started as a Xen host, it's been running happily for quite some time. I suspect next year, or the year after that, the price/specification ratio will end up losing out and we'll cancel the whole thing - but there are no immediate reasons to make any change.

Finally I knocked up a simple tool to validate my TinyDNS records prior to uploading them. It is simplistic, but adequate to catch the kind of mistakes I make:

Honestly it probably wants to be rationalised a little more, and to check records more carefully, e.g. ensuring that the host a CNAME refers to actually exists, and making sure that the nameservers specified are valid.
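As a rough idea of what such a checker can look like, here is a sketch in Python. It is not the tool described above: it only understands a few of the stock tinydns-data line types, validates the IPv4 address on `+` and `=` lines, and checks that CNAME (`C`) targets are defined in the same file, which is an assumption that will produce false alarms for aliases pointing at external hosts.

```python
import ipaddress

# Leading characters used by the stock tinydns-data format.
KNOWN_TYPES = set(".&=+@'^CZ:%")


def validate_tinydns(text):
    """Return a list of human-readable problems found in tinydns data."""
    problems = []
    defined = set()   # names that have a valid address record
    cnames = []       # (line number, alias, target)

    for number, line in enumerate(text.splitlines(), start=1):
        line = line.strip()
        if not line or line[0] in "#-":
            continue  # blank, comment, or disabled line
        kind, fields = line[0], line[1:].split(":")
        if kind not in KNOWN_TYPES:
            problems.append(f"line {number}: unknown record type {kind!r}")
            continue
        if kind in "+=":
            # "+fqdn:ip:ttl" and "=fqdn:ip:ttl" need a valid IPv4 address.
            try:
                ipaddress.IPv4Address(fields[1] if len(fields) > 1 else "")
                defined.add(fields[0].lower())
            except ValueError:
                problems.append(f"line {number}: bad IPv4 address in {line!r}")
        elif kind == "C":
            target = fields[1] if len(fields) > 1 else ""
            cnames.append((number, fields[0], target.rstrip(".").lower()))

    # Only names inside this file can be verified (see the caveat above).
    for number, alias, target in cnames:
        if target not in defined:
            problems.append(f"line {number}: {alias} points at unknown host {target}")
    return problems
```

An empty list means nothing obviously fat-fingered was found, so a wrapper script could refuse to upload the zone whenever the list is non-empty.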

I just wanted to make something quick after accidentally uploading a zonefile where I'd managed to fat-finger several important records. le sigh.

Oddly enough asking on serverfault.com showed no real suggestions - other than actually running tinydns locally and doing a zone-xfer to validate records. Overkill and harder than I'd like.

Happy New Year if you care about such things.

"I finished growing up, Léon. I just get older. " - Leon

Tags: debian-administration, kvm-hosting, tinydns

|

6 January 2013 21:50

The new version of the Debian Administration is almost ready now. I'm just waiting on some back-end changes to happen on the excellent BigV hosting product.

I was hoping that the migration would be a fun "Christmas Project", but I had to wait for outside help once or twice and that pushed things back a little. Still it is hard to be anything other than grateful to folk who volunteer time, energy, and enthusiasm.

Otherwise this week has largely consisted of sleeping, planting baby spider-plants, shuffling other plants around (Aloe Vera, Cacti, etc), and enjoying my new moving plant (video isn't my specific plant).

I've spent too long reworking templer such that it is now written in a modular fashion and supports plugins. The documentation has been overhauled too.

The only feedback I received was that it should support inline perl - so I added that as a plugin this morning via a new formatter plugin:

Title: This is my page title

Format: perl

Name: Steve

----

This is my page. It has inline perl:

The sum of 1 + 5 is { 1 + 5 }

This page was written by { $name }

ObQuote: "She even attacked a mime. Just found out about it. Seems the mime had been reluctant to talk. " - Hexed

Tags: debian, debian-administration, templer

|

22 January 2013 21:50

I want to like LDAP. Every so often I do interesting things with it, and I start to think I like it, then some software that claims to support LDAP fails to do so properly and I remember I hate it again.

I guess the problem with LDAP is that most people are scared by it, and unless you reach a certain level of scale you don't need it. That makes installing it out of the blue a scary prospect, and that means that lots of toy-software applications don't even consider using it until they're mature and large.

When you bolt-on support for LDAP to an existing project you have to make compromises; do you create local entries in your system for these scary-remote-LDAP-users? Do you map group members from LDAP into your own group system? And so on.

To be fair to the application developers, if the requirements for installation were "Install LDAP" they'd probably have a damn sight smaller userbase, and so we cannot blame OpenLDAP, or the other servers.

All the same it is a shame.

The very next piece of software I ever write that needs to handle logins will use LDAP and only LDAP. How hard can it be?

In happier news I re-deployed http://www.debian-administration.org/ over the weekend. It now uses the Bytemark BigV platform which rocks.

The migration was supposed to be a "Christmas Project", but took longer than expected due to the number of changes I needed to make to the software, and my deployment plan. Still I'm very happy with the way things are running now, and don't expect I'll need to move or make significant changes for the next nine years. I just hope there is still interest in such things then.

ObQuote: "Would you like a treatment? " - Dollhouse

Tags: debian-administration, ldap, misc

|

21 August 2014 21:50

Recently I've been getting annoyed with the Debian Administration website; too often it would be slower than it should be considering the resources behind it.

As a brief recap I have six nodes:

- 1 x MySQL Database - The only MySQL database I personally manage these days.

- 4 x Web Nodes.

- 1 x Misc server.

The misc server is designed to display events. There is a node.js listener which receives UDP messages and stores them in a rotating buffer. The messages might contain things like "User bob logged in", "Slaughter ran", etc. It's a neat hack which gives a good feeling of what is going on cluster-wide.

I need to rationalize that code - but there's a very simple predecessor posted on github for the curious.
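The real listener is node.js, but the idea is small enough to sketch in Python: a UDP socket feeding a fixed-size buffer that silently drops the oldest entries. The class and function names here are my own invention for the example.

```python
import collections
import socket


class EventBuffer:
    """Receive UDP datagrams and keep only the most recent ones."""

    def __init__(self, host="127.0.0.1", port=0, size=100):
        self.events = collections.deque(maxlen=size)  # old entries fall off
        self.sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        self.sock.bind((host, port))  # port 0 lets the OS pick a free port
        self.sock.settimeout(1.0)

    @property
    def address(self):
        return self.sock.getsockname()

    def receive_one(self):
        """Block (up to the timeout) for one message and store it."""
        data, _sender = self.sock.recvfrom(4096)
        self.events.append(data.decode("utf-8", errors="replace"))

    def close(self):
        self.sock.close()


def send_event(address, message):
    """Fire-and-forget sender, as a web node might use."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message.encode("utf-8"), address)
```

Because UDP is fire-and-forget, a dead listener never slows the web nodes down, which is most of the appeal for this kind of "what's happening right now" display.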

Anyway, enough diversions: the database is tuned, and "small". The misc server is almost entirely irrelevant, non-public, and not explicitly advertised.

So what do the web nodes run? Well they run a lot. Potentially.

Each web node has four services configured:

- Apache 2.x - All nodes.

- uCarp - All nodes.

- Pound - Master node.

- Varnish - Master node.

Apache runs the main site, listening on *:8080.

One of the nodes will be special and will claim a virtual IP provided via ucarp. The virtual IP is actually the end-point visitors hit, meaning we have:

| Master Host | Other hosts |

| Running: Apache, uCarp, Pound, Varnish | Running: Apache, uCarp |

Pound is configured to listen on the virtual IP and perform SSL termination. That means that incoming requests get proxied from "vip:443 -> vip:80". Varnish listens on "vip:80" and proxies to the back-end apache instances.

The end result should be high availability. In the typical case all four servers are alive, and all is well.

If one server dies, and it is not the master, then it will simply be dropped as a valid back-end. If a single server dies and it is the master then a new one will appear, thanks to the magic of ucarp, and the remaining three will be used as expected.

I'm sure there is a pathological case when all four hosts die, and at that point the site will be down, but that's something that should be atypical.

Yes, I am prone to over-engineering. The site doesn't have any availability requirements that justify this setup, but it is good to experiment and learn things.

So, with this setup in mind, with incoming requests (on average) being divided at random onto one of four hosts, why is the damn thing so slow?

We'll come back to that in the next post.

(Good news though; I fixed it ;)

Tags: brightbox, debian-administration, yawns

|

23 August 2014 21:50

So I previously talked about the setup behind Debian Administration, and my complaints about the slowness.

The previous post talked about the logical setup, and the hardware. This post talks about the more interesting thing. The code.

The code behind the site was originally written by Denny De La Haye. I found it and reworked it a lot, most obviously adding structure and test cases.

Once I did that the early version of the site was born.

Later my version became the official version, as when Denny set up Police State UK he used my codebase rather than his.

So the code huh? Well as you might expect it is written in Perl. There used to be this layout:

yawns/cgi-bin/index.cgi

yawns/cgi-bin/Pages.pl

yawns/lib/...

yawns/htdocs/

Almost every request would hit the index.cgi script, which would parse the request and return the appropriate output via the standard CGI interface.

How did it know what you wanted? Well sometimes there would be a parameter set which would be looked up in a dispatch-table:

/cgi-bin/index.cgi?article=40 - Show article 40

/cgi-bin/index.cgi?view_user=Steve - Show the user Steve

/cgi-bin/index.cgi?recent_comments=10 - Show the most recent comments.
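For anyone unfamiliar with the idea, a dispatch-table is just a mapping from parameter name to handler. A rough Python equivalent (the original was Perl, and these handler functions are stand-ins I've made up) looks like this:

```python
from urllib.parse import parse_qsl


def show_article(value):
    return f"Show article {value}"


def show_user(value):
    return f"Show the user {value}"


def show_recent_comments(value):
    return f"Show the {value} most recent comments"


# Parameter name -> handler, mirroring the URLs listed above.
DISPATCH = {
    "article": show_article,
    "view_user": show_user,
    "recent_comments": show_recent_comments,
}


def dispatch(query_string):
    """Call the handler for the first recognised parameter in the query."""
    for name, value in parse_qsl(query_string):
        handler = DISPATCH.get(name)
        if handler is not None:
            return handler(value)
    return "Show the front page"  # assumed default, not taken from the original
```

The weakness is visible even here: any parameter can select a page, so nothing stops two handlers fighting over the same request.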

Over time the code became hard to update because there was no consistency, and over time the site became slow because this is not a quick setup. Spiders, bots, and just average users would cause a lot of perl processes to run.

So? What did I do? I moved the thing to using FastCGI, which avoids the cost of forking Perl and loading the (100k+) code on every request.

Unfortunately this required a bit of work because all the parameter handling was messy and caused issues if I just renamed index.cgi -> index.fcgi. The most obvious solution was to use one parameter, globally, to specify the requested mode of operation.

Hang on? One parameter to control the page requested? A persistent environment? What does that remind me of? Yes. CGI::Application.

I started small, and pulled some of the code out of index.cgi + Pages.pl, and over into a dedicated CGI::Application class:

- Application::Feeds - Called via /cgi-bin/f.fcgi.

- Application::Ajax - Called via /cgi-bin/a.fcgi.

So now every part of the site that is called by Ajax has one persistent handler, and every part of the site which returns RSS feeds has another.

I had some fun setting up the sessions to match those created by the old stuff, but I quickly made it work, as this example shows:

The final job was the biggest, moving all the other (non-feed, non-ajax) modes over to a similar CGI::Application structure. There were 53 modes that had to be ported, and I did them methodically, first porting all the Poll-related requests, then all the article-related ones, & etc. I think I did about 15 a day for three days. Then the rest in a sudden rush.

In conclusion the code is now fast because we don't use CGI, and instead use FastCGI.

This allowed minor changes to be carried out, such as compiling the HTML::Template templates which determine the look and feel, etc. Those things don't make sense in the CGI environment, but with persistence they are essentially free.

The site got a little more of a speed boost when I updated DNS, and a lot more when I blacklisted a bunch of IP-space.

As I was wrapping this up I realized that the code had accidentally become closed - because the old repository no longer exists. That is not deliberate, or intentional, and will be rectified soon.

The site would never have been started if I'd not seen Denny's original project, and although I don't think others would use the code it should be possible. I remember at the time I was searching for things like "Perl CMS" and finding Slashcode, and Scoop, which I knew were too heavyweight for my little toy blog.

In conclusion Debian Administration website is 10 years old now. It might not have changed the world, it might have become less relevant, but I'm glad I tried, and I'm glad there were years when it really was the best place to be.

These days there are HowtoForges, blogs, spam posts titled "How to install SSH on Trusty", "How to install SSH on Wheezy", "How to install SSH on Precise", and all that. No shortage of content, just finding the good from the bad is the challenge.

Me? The single best resource I read these days is probably LWN.net.

Starting to ramble now.

Go look at my quick hack for remote command execution https://github.com/skx/nanoexec ?

Tags: debian-administration, yawns

|

29 November 2015 21:50

This weekend I've mostly been tidying up some personal projects and things.

- http://debian-administration.org/

This was updated to use recaptcha on the sign-up page, which is my attempt to cut down on the 400+ spam-registrations it receives every day.

I've purged a few thousand bogus-accounts, which largely existed to point to spam-sites in their profile-pages. I go through phases where I do this, but my heuristics have always been a little weak.

- http://dhcp.io/

This site offers free dynamic DNS for a few hundred users. I closed fresh signups due to it being abused by spammers, but it does have some users and I sometimes add new people who ask politely.

Unfortunately some users hammer it, trying to update their DNS records every 60 seconds or so. (One user has spent the past few months updating their IP address every 30 seconds, ironically their external IP hadn't changed in all that time!)

So I suspended a few users, and implemented a minimum-update threshold: Nobody can update their IP address more than once every fifteen minutes now.
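A minimum-update threshold like that needs very little code. A sketch in Python, with hypothetical names and an in-memory dictionary where the real service would presumably use its database:

```python
MIN_INTERVAL = 15 * 60  # seconds; the fifteen-minute threshold


class UpdateThrottle:
    def __init__(self, min_interval=MIN_INTERVAL):
        self.min_interval = min_interval
        self.last_update = {}  # user -> timestamp of last accepted update

    def allow(self, user, now):
        """Return True and record the update if the user may update now."""
        previous = self.last_update.get(user)
        if previous is not None and now - previous < self.min_interval:
            return False
        self.last_update[user] = now
        return True
```

Rejected attempts deliberately don't reset the timer, so a client hammering every 30 seconds still gets one update through every fifteen minutes.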

- Literate Emacs Configuration File

Working towards my stateless home-directory I've been tweaking my dotfiles, and the last thing I did today was move my Emacs configuration over to a literate fashion.

My main emacs configuration-file is now a markdown file, which contains inline-code. The inline-code is parsed at runtime, and executed when Emacs launches. The init.el file which parses/evals is pretty simple, and I'm quite pleased with it. Over time I'll extend the documentation and move some of the small snippets into it.
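The parsing half of that is simple enough to sketch. The real thing is Emacs Lisp in init.el; the version below is Python, purely to show the idea, and it assumes the code lives in fenced blocks tagged `lisp`, which is my guess at the convention rather than a description of the actual file.

```python
FENCE = "`" * 3  # built this way to avoid a literal fence inside this example


def extract_code(markdown, language="lisp"):
    """Return the bodies of fenced code blocks tagged with the given language."""
    blocks, current = [], None
    for line in markdown.splitlines():
        if current is None:
            if line.strip() == FENCE + language:
                current = []  # entering a matching block
        elif line.strip() == FENCE:
            blocks.append("\n".join(current))
            current = None    # leaving the block
        else:
            current.append(line)
    return blocks
```

Each returned block would then be handed to the evaluator in order, so the prose between the blocks is the documentation and the blocks are the configuration.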

- Offsite backups

My home system(s) always had a local backup, maintained on an external 2Tb disk-drive, along with a remote copy of some static files which were maintained using rsync. I've now switched to having a virtual machine host the external backups with proper incrementals - via attic, which beats my previous "only one copy" setup.

- Virtual Machine Backups

On a whim a few years ago I registered rsync.io which I use to maintain backups of my personal virtual machines. That still works, though I'll probably drop the domain and use backup.steve.org.uk or similar in the future.

FWIW the external backups are hosted on BigV, which gives me a 2Tb "archive" disk for £40 a month. Perfect.

Tags: debian-administration, misc

|

7 February 2016 21:50

I'm slowly planning the redesign of the cluster which powers the Debian Administration

website.

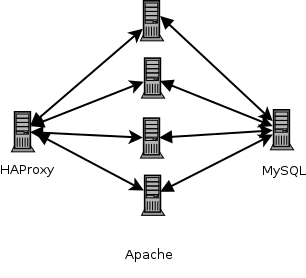

Currently the design is simple, and looks like this:

In brief there is a load-balancer that handles SSL-termination and then proxies to one of four Apache servers. These talk back and forth to a MySQL database. Nothing too shocking, or unusual.

(In truth there are two database servers, and rather than a single installation of HAProxy it runs upon each of the webservers - one is the master, which is handled via ucarp. Logically though traffic routes through HAProxy to a number of Apache instances. I can lose half of the servers and things still keep running.)

When I set up the site it all ran on one host; it was simpler, and it was less highly available. It also struggled to cope with the load.

Half the reason for writing/hosting the site in the first place was to document learning experiences though, so when it came time to make it scale I figured why not learn something and do it neatly? Having it run on cheap and reliable virtual hosts was a good excuse to bump the server-count and the design has been stable for the past few years.

Recently though I've begun planning how it will be deployed in the

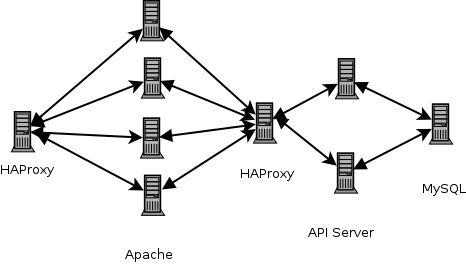

future and I have a new design:

Rather than having the Apache instances talk to the database I'll indirect through an API-server. The API server will handle requests like these:

- POST /users/login

  - POST a username/password and return 200 if valid. If bogus details return 403. If the user doesn't exist return 404.

- GET /users/Steve

  - Return a JSON hash of user-information.

  - Return 404 on invalid user.
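The status-code behaviour of those two requests can be sketched without any web framework. This is Python for illustration only; the user store, the hashing scheme and the function names are all assumptions, and a real service would use a salted, slow password hash.

```python
import hashlib
import hmac

# Hypothetical user store: username -> SHA-256 of the password.
USERS = {"Steve": hashlib.sha256(b"s3cret").hexdigest()}


def login_status(username, password):
    """Return the HTTP status for POST /users/login as described above."""
    stored = USERS.get(username)
    if stored is None:
        return 404  # the user doesn't exist
    supplied = hashlib.sha256(password.encode("utf-8")).hexdigest()
    if hmac.compare_digest(stored, supplied):
        return 200  # valid credentials
    return 403      # bogus details


def get_user(username):
    """Return (status, JSON-able body) for GET /users/<name>."""
    if username not in USERS:
        return 404, {}
    return 200, {"username": username}
```

With the logic reduced to "inputs in, status out" like this, the front-end nodes only need to make an HTTP call and look at the response code.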

I expect to have four API handler endpoints: /articles, /comments, /users & /weblogs. Again we'll use a floating IP and a HAProxy instance to route to multiple API-servers, each of which will use local caching to cache articles, etc.

This should turn the middle layer, running on Apache, into simpler things, and increase throughput. I suspect, but haven't confirmed, that making a single HTTP-request to fetch a (formatted) article body will be cheaper than making N-database queries.

Anyway that's what I'm slowly pondering and working on at the moment. I wrote a proof of concept API-server based CMS two years ago, and my recollection of that time is that it was fast to develop, and easy to scale.

Tags: cluster, debian-administration, haproxy

|

11 September 2017 21:50

After 13 years the Debian-Administration website will be closing down towards the end of the year.

The site will go read-only at the end of the month, and will slowly be stripped back from that point towards the end of the year - leaving only a static copy of the articles, and content.

This is largely happening due to lack of content. There were only two articles posted last year, and every time I consider writing more content I lose my enthusiasm.

There was a time when people contributed articles, but these days they tend to post such things on their own blogs, on medium, on Reddit, etc. So it seems like a good time to retire things.

An official notice has been posted on the site-proper.

Tags: debian-administration

|

21 September 2017 21:50

So previously I've documented the setup of the Debian-Administration website, and now that I'm going to retire it I'm planning how that will work.

There are currently 12 servers powering the site:

- web1

- web2

- web3

- web4

  - These perform the obvious role, serving content over HTTPS.

- public

  - This is a HAProxy host which routes traffic to one of the four back-ends.

- database

  - This stores the site-content.

- events

  - There was a simple UDP-based protocol which sent notices here, from various parts of the code.

  - e.g. "Failed login for bob from 1.2.3.4".

- mailer

  - Sends out emails. ("You have a new reply", "You forgot your password..", etc)

- redis

  - This stored session-data, and short-term cached content.

- backup

  - This contains backups of each host, via Obnam.

- beta

  - A test-install of the codebase

- planet

  - The blog-aggregation site

I've made a bunch of commits recently to drop the event-sending, since no more dynamic actions will be possible. So events can be retired immediately. redis will go when I turn off logins, as there will be no need for sessions/cookies. beta is only used for development, so I'll kill that too. Once logins are gone, and anonymous content is disabled there will be no need to send out emails, so mailer can be shut down.

That leaves a bunch of hosts left:

- database

  - I'll export the database and kill this host.

  - I will install mariadb on each web-node, and each host will be configured to talk to localhost only

  - I don't need to worry about four databases receiving diverging content as updates will be disabled.

- backup

- planet

  - This will become orphaned, so I think I'll just move the content to the web-nodes.

All in all I think we'll just have five hosts left:

- public to do the routing

- web1-web4 to do the serving.

I think that's sane for the moment. I'm still pondering whether to export the code to static HTML; there's a lot of appeal as the load would drop a lot, but equally I have a hell of a lot of mod_rewrite redirections in place, and reworking all of them would be a pain. I suspect this is something that will be done in the future, maybe next year.

Tags: debian-administration

|

28 January 2020 12:20

So recently I talked about how I was moving my email to a paid GSuite account, that process has now completed.

To recap I've been paying approximately €65/month for a dedicated host from Hetzner:

- 2 x 2Tb drives.

- 32Gb RAM.

- 8-core CPU.

To be honest the server itself has been fine, but the invoice is a little horrific regardless:

- SB31 - €26.05

- Additional subnet /27 - €26.89

I'm actually paying more for the IP addresses than for the server! Anyway I was running a bunch of virtual machines on this host:

- mail

  - Exim4 + Dovecot + SSH

  - I'd SSH to this host, daily, to read mail with my console-based mail-client, etc.

- www

  - Hosted websites.

  - Each different host would run an instance of lighttpd, serving on localhost:XXX running under a dedicated UID.

  - Then Apache would proxy to the right one, and handle SSL.

- master

  - Puppet server, and VPN-host.

- git

- ..

- Bunch more servers, nine total.

My plan is to basically cut down and kill 99% of these servers, and now I've made the initial pass:

I've now bought three virtual machines, and juggled stuff around upon them. I now have:

- debian - €3.00/month

- dns - €3.00/month

  - This hosts my commercial DNS thing

  - Admin overhead is essentially zero.

  - Profit is essentially non-zero :)

- shell - €6.00/month

  - The few dynamic sites I maintain were moved here, all running as www-data behind Apache. Meh.

  - This is where I run cron-jobs to invoke rss2email, my google mail filtering hack.

  - This is also a VPN-provider, providing a secure link to my home desktop, and the other servers.

The end result is that my hosting bill has gone down from being around €50/month to about €20/month (€6/month for gsuite hosting), and I have far fewer hosts to maintain, update, manage, and otherwise care about.

Since I'm all cloudy-now I have backups via the provider, as well as those maintained by rsync.net. I'll need to rebuild the shell host over the next few weeks as I mostly shuffled stuff around in-place in an adhoc fashion, but the two other boxes were deployed entirely via Ansible, and Deployr. I made the decision early on that these hosts should be trivial to relocate and they have been!

All static-sites such as my blog, my vanity site and similar have been moved to netlify. I lose the ability to view access-logs, but I'd already removed analytics because I just don't care. I've also lost the ability to have custom 404-pages, etc. But the fact that I don't have to maintain a host just to serve static pages is great. I was considering using AWS to host these sites (i.e. S3) but chose against it in the end as it is a bit complex if you want to use cloudfront/cloudflare to avoid bandwidth-based billing surprises.

I dropped MX records from a bunch of domains, so now I only receive email at steve.fi, steve.org.uk, and to a lesser extent dns-api.com. That goes to Google. Migrating to GSuite was pretty painless although there was a surprise: I figured I'd set up a single user, then use aliases to handle the mail such that:

- debian@example -> steve

- facebook@example -> steve

- webmaster@example -> steve

All told I have about 90 distinct local-parts configured in my old Exim setup. Turns out that GSuite has a limit of like 20 aliases per-user. Happily you can achieve the same effect with address maps. If you add an address map you can have about 4000 distinct local-parts, and reject anything else. (I can't think of anything worse than having wildcard handling; I've been hit by too many bounce-attacks in the past!)

Oh, and I guess for completeness I should say I also have a single off-site box hosted by Scaleway for €5/month. This runs monitoring via overseer and notification via purppura. Monitoring includes testing that websites are up, that responses contain a specific piece of text, DNS records resolve to expected values, SSL certificates haven't expired, & etc.

Monitoring is worth paying for. I'd be tempted to charge people to use it, but I suspect nobody would pay. It's a cute setup and very flexible and reliable. I've been pondering adding a scripting language to the notification - since at the moment it alerts me via Pushover, Email, and SMS-messages. Perhaps I should just settle on one! Having a scripting language would allow me to use different mechanisms for different services, and severities.

Then again maybe I should just pay for pingdom, or similar? I have about 250 tests which run every two minutes. That usually exceeds most services' free/cheap offerings.

Tags: aws, debian, debian-administration, gmail, google, hetzner, hosting, netlify, pingdom, s3, servers

|

1 November 2020 13:00

Back in 2017 I announced that the https://Debian-Administration.org website was being made read-only, and archived.

At the time I wrote a quick update to save each requested page as a flat-file, hashed beneath /tmp, with the expectation that after a few months I'd have a complete HTML-only archive of the site which I could serve as a static-website, instead of keeping the database and pile of CGI scripts running.

Unfortunately I never got round to archiving the pages in a git-repository, or some other store, and I usually only remembered this local tree of content was available a few minutes after I'd rebooted the server and lost the stuff - as the reboot would reap the contents of /tmp!
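For reference, the "save each requested page as a hashed flat-file" idea is only a few lines. The sketch below is Python and is not the code that ran on the site; the directory layout (two levels of hash-prefix fan-out) and the function names are assumptions.

```python
import hashlib
import os


def archive_path(root, request_path):
    """Map a request path to a file beneath root, fanned out by hash prefix."""
    digest = hashlib.sha1(request_path.encode("utf-8")).hexdigest()
    return os.path.join(root, digest[:2], digest[2:4], digest + ".html")


def save_page(root, request_path, html):
    """Write the rendered page to its hashed location and return the path."""
    path = archive_path(root, request_path)
    os.makedirs(os.path.dirname(path), exist_ok=True)
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(html)
    return path
```

Pointing `root` at somewhere that survives a reboot, rather than /tmp, would have avoided the problem described above.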

Thinking about it today I figured I probably didn't even need to do that; instead I just need to redirect to the wayback machine. Working on the assumption that the site has been around for "a while", it should have all the pages mirrored by now, so I've made a "final update" to Apache:

RewriteEngine on

RewriteRule ^/(.*) "http://web.archive.org/web/https://debian-administration.org/$1" [R,L]

Assuming nobody reports a problem in the next month I'll retire the server and make a simple docker container to handle the appropriate TLS certificate renewal, and hardwire the redirection(s) for the sites involved.

Tags: apache, cgi, debian-administration, wayback-machine

|

|